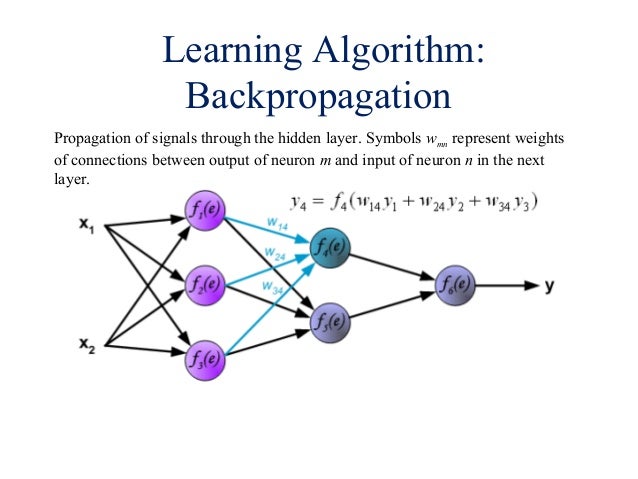

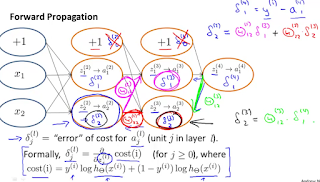

For more clarity about the gradient descent algorithm, see the article "Complete analysis of gradient descent algorithm."

Backpropagation is a supervised learning algorithm that takes an error function and an artificial neural network (ANN) and calculates the gradient of the error function with respect to the weights of the network. It computes this gradient with the chain rule, and we use the result to train the model. The name itself explains that information is transmitted in the backward direction: each forward pass is followed by a backward pass, and the weights are adjusted accordingly.

In this article, I will walk you through the process of backpropagation and its applications using a three-layer neural network. Let's delve in and learn more about backpropagation in deep learning.

To see how the backpropagation algorithm works, we will consider a simple neural network with three layers: one input layer, one hidden layer, and one output layer. In the picture above, i1 and i2 are input neurons, h1 and h2 are hidden neurons, and o1 and o2 are output neurons. The w's represent the weights of the connections between neurons in adjacent layers. The input we feed into the input-layer neurons can be a scalar, a feature vector, or a multidimensional matrix.

We feed the input to the input layer, and the output of the input layer goes to the hidden layer as its input. The cumulative output of the hidden layer then goes to the output layer as its input, and the output layer produces the final output. Next, we propagate the error calculated at the output layer back toward the input layer and update the weights accordingly.

Now, let's see how to calculate the outputs of the layers in this network.
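Below is a minimal sketch in plain Python of one forward pass and one backward pass through the 2-2-2 network just described (i1, i2 → h1, h2 → o1, o2). The article does not name an activation function, error function, bias terms, or learning rate. This sketch therefore assumes a sigmoid activation, a squared error E = 1/2 * sum((t - o)^2), one bias per neuron, and a learning rate of 0.5. The names `forward`, `backward`, and `train_step` and all numeric values are hypothetical and chosen only for illustration. The `backward` function applies the chain rule layer by layer, which is the core idea of backpropagation.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # Matrix[row][col]: row = neuron in the next layer


def sigmoid(z: float) -> float:
    # Assumed activation function (the article does not name one).
    return 1.0 / (1.0 + math.exp(-z))


def forward(x: Vector, W_ih: Matrix, b_h: Vector,
            W_ho: Matrix, b_o: Vector) -> Tuple[Vector, Vector]:
    """Forward pass: input layer -> hidden layer (h1, h2) -> output layer (o1, o2)."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W_ih, b_h)]
    o = [sigmoid(sum(w * hj for w, hj in zip(row, h)) + b)
         for row, b in zip(W_ho, b_o)]
    return h, o


def squared_error(o: Vector, target: Vector) -> float:
    # Assumed error function: E = 1/2 * sum((t - o)^2).
    return 0.5 * sum((t - y) ** 2 for y, t in zip(o, target))


def backward(x: Vector, target: Vector, W_ho: Matrix,
             h: Vector, o: Vector) -> Tuple[Matrix, Vector, Matrix, Vector]:
    """Backward pass: chain rule from the output error back to the input-layer weights."""
    # dE/dnet_o = dE/do * do/dnet_o = (o - t) * o * (1 - o) for sigmoid + squared error.
    delta_o = [(y - t) * y * (1.0 - y) for y, t in zip(o, target)]
    # dE/dnet_h: sum the error signals flowing back through each outgoing weight.
    delta_h = [sum(delta_o[k] * W_ho[k][j] for k in range(len(o))) * h[j] * (1.0 - h[j])
               for j in range(len(h))]
    grad_W_ho = [[d * hj for hj in h] for d in delta_o]  # dE/dw = delta * incoming activation
    grad_W_ih = [[d * xi for xi in x] for d in delta_h]
    return grad_W_ih, delta_h, grad_W_ho, delta_o  # bias gradients equal the deltas


def train_step(x: Vector, target: Vector, W_ih: Matrix, b_h: Vector,
               W_ho: Matrix, b_o: Vector, lr: float = 0.5) -> float:
    """Forward pass, backward pass, then an in-place gradient-descent update.
    Returns the error measured before the update."""
    h, o = forward(x, W_ih, b_h, W_ho, b_o)
    g_W_ih, g_b_h, g_W_ho, g_b_o = backward(x, target, W_ho, h, o)
    for W, G in ((W_ih, g_W_ih), (W_ho, g_W_ho)):
        for row, grow in zip(W, G):
            for i, g in enumerate(grow):
                row[i] -= lr * g
    for b, g in ((b_h, g_b_h), (b_o, g_b_o)):
        for i, gi in enumerate(g):
            b[i] -= lr * gi
    return squared_error(o, target)


if __name__ == "__main__":
    # Hypothetical inputs, targets and initial weights, for illustration only.
    x, target = [0.05, 0.10], [0.01, 0.99]
    W_ih = [[0.15, 0.20], [0.25, 0.30]]  # weights into h1, h2
    b_h = [0.35, 0.35]
    W_ho = [[0.40, 0.45], [0.50, 0.55]]  # weights into o1, o2
    b_o = [0.60, 0.60]
    for step in range(1000):
        err = train_step(x, target, W_ih, b_h, W_ho, b_o)
        if step % 250 == 0:
            print(f"step {step}: error = {err:.6f}")
```

The hidden-layer deltas are computed from the output weights *before* those weights are updated. This ordering matters: the gradient must describe the network that produced the error. A quick way to check such a sketch is to compare one entry of `backward`'s output against a finite-difference estimate of the error's slope.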

Backpropagation was introduced in the early 1970s, but it was not widely appreciated until a 1986 research paper by Rumelhart, Hinton, and Williams. That paper describes several neural networks in which backpropagation works far faster than earlier approaches to learning, and this made it possible to use neural nets to solve previously unsolvable problems. Backpropagation is short for "backward propagation of errors." It is the fundamental building block of many other neural network algorithms, and at present it is the workhorse of training in deep learning.

The backpropagation algorithm is widely used to train neural networks. It is a supervised learning algorithm for artificial neural networks that works together with gradient descent. In the picture above, the dots are connected into a path that follows the steepest descent from the starting point. The starting point can be any point on the graph, and the steepest descent is the blue region indicated by the red arrows.
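To make the descent path concrete, here is a minimal sketch of plain gradient descent in Python. It does not reproduce the article's figure. Instead it assumes a hypothetical bowl-shaped function f(x, y) = x^2 + 3y^2, an arbitrary starting point, and a learning rate of 0.1. The names `gradient_descent`, `f`, and `grad_f` are illustrative. Each step moves against the gradient, which is the direction of steepest local descent, and the recorded points play the role of the connected dots in the picture.

```python
from typing import Callable, List, Tuple

Point = Tuple[float, float]


def f(x: float, y: float) -> float:
    # Hypothetical bowl-shaped error surface with its minimum at (0, 0).
    return x ** 2 + 3 * y ** 2


def grad_f(x: float, y: float) -> Point:
    # Analytic gradient of f: (df/dx, df/dy).
    return (2 * x, 6 * y)


def gradient_descent(grad: Callable[[float, float], Point], start: Point,
                     lr: float = 0.1, steps: int = 100) -> List[Point]:
    """Repeatedly step against the gradient and record every visited point."""
    path = [start]
    x, y = start
    for _ in range(steps):
        gx, gy = grad(x, y)
        x, y = x - lr * gx, y - lr * gy  # move in the steepest-descent direction
        path.append((x, y))
    return path


if __name__ == "__main__":
    # Any starting point works; (4.0, -2.0) is an arbitrary choice.
    path = gradient_descent(grad_f, start=(4.0, -2.0))
    for px, py in path[:5]:
        print(f"({px:.4f}, {py:.4f})  f = {f(px, py):.4f}")
```

With a small enough learning rate, f decreases at every step on this function. If the learning rate is too large, the steps overshoot the minimum and can diverge. In backpropagation, the same update rule is applied to the weights, using the gradients that the backward pass computes.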
